The End of the University as We Know It - Nathan Harden - The American Interest Magazine

In fifty years, if not much sooner, half of the roughly 4,500 colleges and universities now operating in the United States will have ceased to exist. The technology driving this change is already at work, and nothing can stop it. The future looks like this: Access to college-level education will be free for everyone; the residential college campus will become largely obsolete; tens of thousands of professors will lose their jobs; the bachelor's degree will become increasingly irrelevant; and ten years from now Harvard will enroll ten million students.

We've all heard plenty about the "college bubble" in recent years. Student loan debt is at an all-time high (an average of more than $23,000 per graduate by some counts), and tuition costs continue to rise at a rate far outpacing inflation, as they have for decades. Credential inflation is devaluing the college degree, making graduate degrees, and the greater debt required to pay for them, increasingly necessary for many people to maintain the standard of living they experienced growing up in their parents' homes. Students are defaulting on their loans at an unprecedented rate, too, partly a function of an economy short on entry-level professional positions. Yet, as with all bubbles, persistent public faith in the value of the asset, in this case the college degree, has kept demand high.

The figures are alarming, the anecdotes downright depressing. But the real story of the American higher-education bubble has little to do with individual students and their debts or employment problems. The most important part of the college bubble story—the one we will soon be hearing much more about—concerns the impending financial collapse of numerous private colleges and universities and the likely shrinkage of many public ones. And when that bubble bursts, it will end a system of higher education that, for all of its history, has been steeped in a culture of exclusivity. Then we'll see the birth of something entirely new as we accept one central and unavoidable fact: The college classroom is about to go virtual.

W

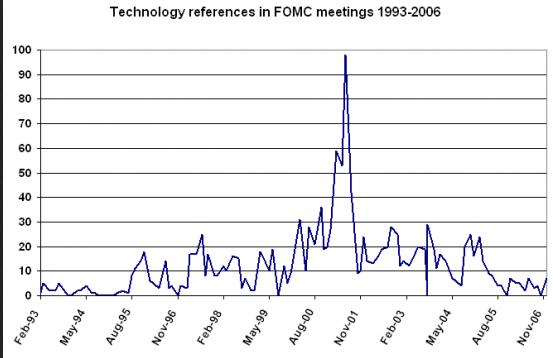

e are all aware that the IT revolution is having an impact on education, but we tend to appreciate the changes in isolation, and at the margins. Very few have been able to exercise their imaginations to the point that they can perceive the systemic and structural changes ahead, and what they portend for the business models and social scripts that sustain the status quo. That is partly because the changes are threatening to many vested interests, but also partly because the human mind resists surrender to upheaval and the anxiety that tends to go with it. But resist or not, major change is coming. The live lecture will be replaced by streaming video. The administration of exams and exchange of coursework over the internet will become the norm. The push and pull of academic exchange will take place mainly in interactive online spaces, occupied by a new generation of tablet-toting, hyper-connected youth who already spend much of their lives online. Universities will extend their reach to students around the world, unbounded by geography or even by time zones. All of this will be on offer, too, at a fraction of the cost of a traditional college education.

How do I know this will happen? Because recent history shows us that the internet is a great destroyer of any traditional business that relies on the sale of information. The internet destroyed the livelihoods of traditional stockbrokers and bond salesmen by throwing open to everyone access to the proprietary information they used to sell. The same technology enabled bankers and financiers to develop new products and methods, but, as it turned out, the experience necessary to manage it all did not keep up. Prior to the Wall Street meltdown, it seemed absurd to think that storied financial institutions like Bear Stearns and Lehman Brothers could disappear seemingly overnight. Until it happened, almost no one believed such a thing was possible. Well, get ready to see the same thing happen to a university near you, and not for entirely dissimilar reasons.

The higher-ed business is in for a lot of pain as a new era of creative destruction produces a merciless shakeout, separating those institutions that adapt and prosper from those that stall and die. Meanwhile, students themselves are in for a golden age, characterized by near-universal access to the highest quality teaching and scholarship at a minimal cost. The changes ahead will ultimately bring about the most beneficial, most efficient and most equitable access to education that the world has ever seen. There is much to be gained. We may lose the Gothic arches, the bespectacled lecturers, the dusty books lining the walls of labyrinthine libraries: wonderful images from higher education's past. But nostalgia won't stop the unsentimental beast of progress from wreaking havoc on old ways of doing things. If a faster, cheaper way of sharing information emerges, history shows us that it will quickly supplant what came before. People will not continue to pay tens of thousands of dollars for what technology allows them to get for free.

Technology will also bring future students an array of new choices about how to build and customize their educations. Power is shifting away from selective university admissions officers into the hands of educational consumers, who will soon have their choice of attending virtually any university in the world online. This will dramatically increase competition among universities. Prestigious institutions, especially those few extremely well-endowed ones with money to buffer and finance change, will be in a position to dominate this virtual, global educational marketplace. The bottom feeders (the for-profit colleges and low-level public and non-profit colleges) will disappear or turn into the equivalent of vocational training institutes. Universities of all ranks below the very top will engage each other in an all-out war for survival. In this war, big-budget universities carrying large transactional costs stand to lose the most. Smaller, more nimble institutions with sound leadership will do best.

This past spring, Harvard and MIT got the attention of everyone in the higher-ed business when they announced a new online education venture called edX. The new venture will make online versions of the universities' courses available to a virtually unlimited number of enrollees around the world. Think of the ramifications: Now anyone in the world with an internet connection can access the kind of high-level teaching and scholarship previously available only to a select group of the best and most privileged students. It's all part of a new breed of online courses known as "massive open online courses" (MOOCs), which are poised to forever change the way students learn and universities teach.

One of the biggest barriers to the mainstreaming of online education is the common assumption that students don't learn as well with computer-based instruction as they do with in-person instruction. There's nothing like the personal touch of being in a classroom with an actual professor, says the conventional wisdom, and that's true to some extent. Clearly, online education can't be superior in all respects to the in-person experience. Nor is there any point pretending that information is the same as knowledge, and that access to information is the same as the teaching function instrumental to turning the former into the latter. But researchers at Carnegie Mellon's Open Learning Initiative, who've been experimenting with computer-based learning for years, have found that when machine-guided learning is combined with traditional classroom instruction, students can learn material in half the time. Researchers at Ithaka S+R studied two groups of students—one group that received all instruction in person, and another group that received a mixture of traditional and computer-based instruction. The two groups did equally well on tests, but those who received the computer instruction were able to learn the same amount of material in 25 percent less time.

The real value of MOOCs is their scalability. Andrew Ng, a Stanford computer science professor and co-founder of an open-source web platform called Coursera (a for-profit version of edX), got into the MOOC business after he discovered that thousands of people were following his free Stanford courses online. He wanted to capitalize on the intense demand for high-quality, open-source online courses. A normal class Ng teaches at Stanford might enroll, at most, several hundred students. But in the fall of 2011 his online course in machine learning enrolled 100,000. "To reach that many students before," Ng explained to Thomas Friedman of the New York Times, "I would have had to teach my normal Stanford class for 250 years."

Based on the popularity of the MOOC offerings online so far, we know that open-source courses at elite universities have the potential to serve enormous "classes." An early MIT online course called "Circuits and Electronics" has attracted 120,000 registrants. Top schools like Yale, MIT and Stanford have been making streaming videos and podcasts of their courses available online for years, but MOOCs go beyond this to offer a full-blown interactive experience. Students can intermingle with faculty and with each other over a kind of higher-ed social network. Streaming lectures may be accompanied by short auto-graded quizzes. Students can post questions about course material to discuss with other students. These discussions unfold across time zones, 24 hours a day. In extremely large courses, students can vote questions up or down, so that the best questions rise to the top. It's like an educational amalgam of YouTube, Wikipedia and Facebook.

Among the chattering classes in higher ed, there is an increasing sense that we have reached a tipping point where new interactive web technology, coupled with widespread access to broadband internet service and increased student comfort interacting online, will send online education mainstream. It's easy to forget that only ten years ago Facebook didn't exist. Teens now approaching college age are members of the first generation to have grown up conducting a major part of their social lives online. They are prepared to engage with professors and students online in a way their predecessors weren't, and as time passes more and more professors are comfortable with the technology, too.

In the future, the primary platform for higher education may be a third-party website, not the university itself. What is emerging is a global marketplace where courses from numerous universities are available on a single website. Students can pick and choose the best offerings from each school; the university simply uploads the content. Coursera, for example, has formed agreements with Penn, Princeton, UC Berkeley, and the University of Michigan to manage these schools' forays into online education. On the non-profit side, MIT has been the nation's leader in pioneering open-source online education through its MITx platform, which launched last December and serves as the basis for the new edX platform.

Hold on there a minute, you might object. Just as information is not the same as knowledge, and auto-access is not necessarily auto-didactics, so taking a bunch of random courses does not a coherent university education make. Mere exposure, too, doesn't guarantee that knowledge has been learned. In other words, what about the justifiable function of majors and credentials?

MIT is the first elite university to offer a credential for students who complete its free, open-source online courses. (The certificate of completion requires a small fee.) For the first time, students can do more than simply watch free lectures; they can gain a marketable credential—something that could help secure a raise or a better job. While edX won't offer traditional academic credits, Harvard and MIT have announced that "certificates of mastery" will be available for those who complete the online courses and can demonstrate knowledge of course material. The arrival of credentials, backed by respected universities, eliminates one of the last remaining obstacles to the widespread adoption of low-cost online education. Since edX is open source, Harvard and MIT expect other universities to adopt the same platform and contribute their own courses. And the two universities have put $60 million of their own money behind the project, making edX the most promising MOOC venture out there right now.

Anant Agarwal, an MIT computer science professor and edX's first president, told the Los Angeles Times, "MIT's and Harvard's mission is to provide affordable education to anybody who wants it." That's a very different mission from the one elite schools like Harvard and MIT have had for most of their existence. These schools have long focused on educating the elite: the smartest and, often, the wealthiest students in the world. But Agarwal's statement is an indication that, at some level, these institutions realize that the scalability and economic efficiency of online education allow for a new kind of mission for elite universities. Online education is forcing elite schools to re-examine their priorities. In the future, they will educate the masses as well as the select few. The leaders of Harvard and MIT have founded edX, undoubtedly, because they realize that these changes are afoot, even if they may not yet grasp just how profound those changes will be.

And what about the social experience that is so important to college? Students can learn as much from their peers in informal settings as they do from their professors in formal ones. After college, networking with fellow alumni can lead to valuable career opportunities. Perhaps that is why, after the launch of edX, the presidents of both Harvard and MIT emphasized that their focus would remain on the traditional residential experience. "Online education is not an enemy of residential education," said MIT president Susan Hockfield.

Yet Hockfield's statement doesn't hold true for most less wealthy universities. Harvard and MIT's multibillion-dollar endowments enable them to support a residential college system alongside the virtually free online platforms of the future, but for other universities online education poses a real threat to the residential model. Why, after all, would someone pay tens of thousands of dollars to attend Nowhere State University when he or she can attend an online version of MIT or Harvard practically for free?

This is why those middle-tier universities that have spent the past few decades pouring tens or even hundreds of millions of dollars into offering students the Disneyland for Geeks experience are going to find themselves in real trouble. Along with luxury dorms and dining halls, vast athletic facilities, state of the art game rooms, theaters and student centers have come layers of staff and non-teaching administrators, all of which drives up the cost of the college degree without enhancing student learning. The biggest mistake a non-ultra-elite university could make today is to spend lavishly to expand its physical space. Buying large swaths of land and erecting vast new buildings is an investment in the past, not the future. Smart universities should be investing in online technology and positioning themselves as leaders in the new frontier of open-source education. Creating the world's premier, credentialed open online education platform would be a major achievement for any university, and it would probably cost much less than building a new luxury dorm.

Even some elite universities may find themselves in trouble in this regard, despite their capacity, as noted, to retain the residential norm. In 2007 Princeton completed construction on a new $136 million luxury dormitory for its students—all part of an effort to expand its undergraduate enrollment. Last year Yale finalized plans to build new residential dormitories at a combined cost of $600 million. The expansion will increase the size of Yale's undergraduate population by about 1,000. The project is so expensive that Yale could actually buy a three-bedroom home in New Haven for every new student it is bringing in and still save $100 million. In New York City, Columbia stirred up controversy by seizing entire blocks of Harlem by force of eminent domain for a project with a $6.3 billion price tag. Not to be outdone, Columbia's downtown neighbor, NYU, announced plans to buy up six million square feet of debt-leveraged space in one of the most expensive real estate markets in the world, at an estimated cost of $6 billion. The University of Pennsylvania has for years been expanding all over West Philadelphia like an amoeba gone real-estate insane. What these universities are doing is pure folly, akin to building a compact disc factory in the late 1990s. They are investing in a model that is on its way to obsolescence. If these universities understood the changes that lie ahead, they would be selling off real estate, not buying it—unless they prefer being landlords to being educators.

Now, because the demand for college degrees is so high (whether for good reasons or not is not the question for the moment), and because students and the parents who love them are willing to take on massive debt in order to obtain those degrees, and because the government has been eager to make student loans easier to come by, these universities and others have, so far, been able to keep on building and raising prices. But what happens when a limited supply of a sought-after commodity suddenly becomes unlimited? Prices fall. Yet here, on the cusp of a new era of online education, that is a financial reality that few American universities are prepared to face.

The era of online education presents universities with a conflict of interest: the goal of educating the public on one hand, and the goal of making money on the other. As Burck Smith, CEO of the distance-learning company StraighterLine, has written, universities have "a public-sector mandate" but "a private-sector business model." In other words, raising revenues often trumps the interests of students. Most universities charge as much for their online courses as they do for their traditional classroom courses. They treat the savings of online education as a way to boost profit margins; they don't pass those savings along to students.

One potential source of cost savings for lower-rung colleges would be to draw from open-source courses offered by elite universities. Community colleges, for instance, could effectively outsource many of their courses via MOOCs, becoming, in effect, partial downstream aggregators of others' creations, much as newspapers have used wire services to make up for a decline in the number of reporters. They could then serve more students with fewer faculty, saving money for themselves and students. At a time when many public universities are facing stiff budget cuts and families are struggling to pay for their kids' educations, open-source online education looks like a promising way to reduce costs and increase the quality of instruction. Unfortunately, few college administrators are keen on slashing budgets, downsizing departments or taking other difficult steps to reduce costs. The past thirty years of constant tuition hikes at U.S. universities have shown us that much.

The biggest obstacle to the rapid adoption of low-cost, open-source education in America is that many of the stakeholders make a very handsome living off the system as is. In 2009, 36 college presidents made more than $1 million. That was in the middle of a recession, when most campuses were facing severe budget cuts. Such comfortable arrangements make these presidents rather conservative when it comes to the politics of higher education, in sharp contrast to their usual left-wing political bias in other areas. Reforming themselves out of business by rushing to provide low- and middle-income students credentials for free via open-source courses must be the last thing on those presidents' minds.

Nevertheless, competitive online offerings from other schools will eventually force these "non-profit" institutions to embrace the online model, even if the public interest alone won't. And state governments will put pressure on public institutions to adopt the new open-source model, once politicians become aware of the comparable quality, broad access and low cost it offers.

Considering the greater interactivity and global connectivity that future technology will afford, the gap between the online experience and the in-person experience will continue to close. For a long time now, the largest division within Harvard University has been the little-known Harvard Extension School, a degree-granting division within the Faculty of Arts and Sciences with minimal admissions standards and very low tuition that currently enrolls 13,000 students. The Extension School was founded for the egalitarian purpose of making the Harvard education available to the masses. Nevertheless, Harvard took measures to protect the exclusivity of its brand. The undergraduate degrees offered by the Extension School (Bachelor of Liberal Arts) are distinguished by name from the degrees the university awards through Harvard College (Bachelor of Arts). This model (one university, two types of degrees) offers a good template for Harvard's future, in which the old residential college model will operate parallel to the new online open-source model. The Extension School already offers more than 200 online courses for full academic credit.

Prestigious private institutions and flagship public universities will thrive in the open-source market, where students will be drawn to the schools with bigger names. This means, paradoxically, that prestigious universities, which will have the easiest time holding on to the old residential model, also have the most to gain under the new model. Elite universities that are among the first to offer robust academic programs online, with real credentials behind them, will be the winners in the coming higher-ed revolution.

There is, of course, the question of prestige, which implies selectivity. It's the primary way elite universities have distinguished themselves in the past. The harder it is to get in, the more prestigious a university appears. But limiting admissions to a select few makes little sense in the world of online education, where enrollment is no longer bounded by the number of seats in a classroom or the number of available dorm rooms. In the online world, the only concern is having enough faculty and staff on hand to review essays, grade the tests that aren't automated, answer questions and monitor student progress online.

Certain valuable experiences will be lost in this new online era, as already noted. My own experience at Yale furnishes some specifics. Through its "Open Yale" initiative, Yale has been recording its lecture courses for several years now, making them available to the public free of charge. Anyone with an internet connection can go online and watch some of the same lectures I attended as a Yale undergrad. But that person won't get the social life, the long chats in the dining hall, the feeling of collegiality, the trips around Long Island Sound with the sailing team, the concerts, the iron-sharpens-iron debates around the seminar table, the rare book library, or the famous guest lecturers (although some of those events are streamed online, too). On the other hand, you can watch me and my fellow students take the stage to demonstrate a Hoplite phalanx in Donald Kagan's class on ancient Greek history. You can take a virtual seat next to me in one of Giuseppe Mazzotta's unforgettable lectures on The Divine Comedy.

So while Open Yale can never duplicate the experience of a student with the good fortune to get into Yale, this is a historically significant development. Anyone who can access the internet (at a public library, for instance), no matter how poor or disadvantaged or isolated or uneducated he or she may be, can access the teachings of some of the greatest scholars of our time through open course portals. Technology is a great equalizer. Not everyone is willing or able to take advantage of these kinds of resources, but for those who are, the opportunity is there. As a society, we are experiencing a broadening of access to education equal in significance to the invention of the printing press, the public library or the public school.

Online education is like online dating: fifteen years ago it was considered a poor substitute for the real thing, even creepy; now it's ubiquitous. Online education used to have a stigma, as if it were inherently less rigorous or less effective. Eventually for-profit colleges and public universities, which had less to lose in terms of snob appeal, led the charge in bringing online education into the mainstream. It's very common today for public universities to offer a menu of online courses to supplement traditional courses. Students can be enrolled in both types of courses simultaneously, and can sometimes even be enrolled in traditional classes at one university while taking an online course at another.

The open-source marketplace promises to offer students additional choices in the way they build their credentials. Colleges have long placed numerous restrictions on the number of credits a student can transfer in from an outside institution. In many cases, these restrictions appear useful for little more than protecting the university's bottom line. The open-source model will offer much more flexibility while still preserving the structure of a major for students pursuing a credential. Students who aren't interested in pursuing a traditional four-year degree, or in having any major at all, will be able to earn meaningful credentials one class at a time.

To borrow an analogy from the music industry, universities have previously sold education in an "album" package: the four-year bachelor's degree in a certain major, usually coupled with a core curriculum. The trend for the future will be more compact, targeted educational certificates and credits, which students will be able to pick and choose from to create their own academic portfolios. Take a math class from MIT, an engineering class from Purdue, perhaps a course in environmental law from Yale, and create an interdisciplinary education targeted to one's own interests and career goals. Employers will be able to identify students who have done well in specific courses that match their needs. When people submit résumés to potential employers, they could include a list of these individual courses, and their achievement in them, rather than simply reference a degree and overall GPA. The legitimacy of MOOCs in the eyes of employers will grow, then, as respected universities take the lead in offering open courses with meaningful credentials.

MOOCs will also help meet the increasing need for continuing education. It's worth noting that while the four-year residential experience is what many of us picture when we think of "college," the residential college experience has already become an experience only a minority of the nation's students enjoy. Adult returning students now make up a large share of those attending university. Non-traditional students make up 40 percent of all college students. Together with commuting students, or others taking classes online, they show that the traditional residential college experience is something many students either can't afford or don't want. The for-profit colleges, which often cater to working adult students with a combination of night and weekend classes and online coursework, have tapped into the massive demand for practical and customized education. It's a sign of what is to come.

What about the destruction these changes will cause? Think again of the music industry analogy. Today, when you drive down Music Row in Nashville, a street formerly dominated by the offices of record labels and music publishing companies, you see a lot of empty buildings and rental signs. The contraction in the music industry has been relentless since the MP3 and the iPod emerged. This isn't just because piracy is easier now; it's also because consumers have been given, for the first time, the opportunity to break the album down into individual songs. They can purchase the one or two songs they want and leave the rest. Higher education is about to become like that.

For nearly a thousand years the university system has looked just about the same: professors, classrooms, students in chairs. The lecture and the library have been at the center of it all. At its best, traditional classroom education offers the chance for intelligent and enthusiastic students to engage a professor and one another in debate and dialogue. But typical American college education rarely lives up to this ideal. Deep engagement with texts and passionate learning aren't the prevailing characteristics of most college classrooms today anyway. More common are grade inflation, poor student discipline, and apathetic teachers rubber-stamping students just to keep them paying tuition for one more term.

If you ask students what they value most about the residential college experience, they'll often speak of the unique social experience it provides: the chance to live among one's peers and practice being independent in a sheltered environment, where many of life's daily necessities like cooking and cleaning are taken care of. It's not unlike what summer camp does at an earlier age. For some, college offers the chance to form meaningful friendships and explore unique extracurricular activities. Then, of course, there are the Animal House parties and hookups, which do take their toll: In their research for their book Academically Adrift, Richard Arum and Josipa Roksa found that 45 percent of the students in their study showed no significant gains in learning after two years of college. Consider the possibility that, for the average student, traditional in-classroom university education has proven so ineffective that an online setting could scarcely be worse. But to recognize that would require unvarnished honesty about the present state of play. That's highly unlikely, especially coming from present university incumbents.

The open-source educational marketplace will give everyone access to the best universities in the world. This will inevitably spell disaster for colleges and universities that are perceived as second rate. Likewise, the most popular professors will enjoy massive influence as they teach vast global courses with registrants numbering in the hundreds of thousands (even though "most popular" may well equate to most entertaining rather than to most rigorous). Meanwhile, professors who are less popular, even if they are better but more demanding instructors, will be squeezed out. Fair or not, a reduction in the number of faculty needed to teach the world's students will result. For this reason, pursuing a Ph.D. in the liberal arts is one of the riskiest career moves one could make today. Because much of the teaching work can be scaled, automated or even duplicated by recording and replaying the same lecture over and over again on video, demand for instructors will decline.

Who, then, will do all the research that we rely on universities to do if campuses shrink and the number of full-time faculty diminishes? And how will important research be funded? The news here is not necessarily bad, either: Large numbers of very intelligent and well-trained people may be freed up from teaching to do more of their own research and writing. A lot of top-notch research scientists and mathematicians are terrible teachers anyway. Grant-givers and universities with large endowments will bear a special responsibility to make sure important research continues, but the new environment in higher ed should actually help them to do that. Clearly some kinds of education, such as training heart surgeons, will always require a significant amount of in-person instruction.

Big changes are coming, and old attitudes and business models are set to collapse as new ones rise. Few who will be affected by the changes ahead are aware of what's coming. Severe financial contraction in the higher-ed industry is on the way, and for many this will spell hard times both financially and personally. But if our goal is educating as many students as possible, as well as possible, as affordably as possible, then the end of the university as we know it is nothing to fear. Indeed, it's something to celebrate.